Legacy cloud storage was built for durability and cost efficiency—not to feed GPUs at AI speed. For teams chasing faster training and higher utilization, it’s become the persistent bottleneck that compute tuning alone can’t fix.

AI pipelines demand a radically different performance profile: high-throughput, low-latency reads that keep GPUs fed at line rate, support massive checkpoint files, and handle unpredictable, non-linear access patterns.

Conventional storage stacks—built on multi-tier designs and traditional cloud object storage—simply weren’t optimized for that. They were tuned for cost and capacity, not for AI throughput.

Here’s how those design tradeoffs show up in practice:

- HTTP-based I/O and deep tiering introduce micro-latency that compounds at scale

- Replication and data placement policies create unpredictable performance and slow recovery

- Hidden egress fees and overprovisioning inflate costs as datasets explode

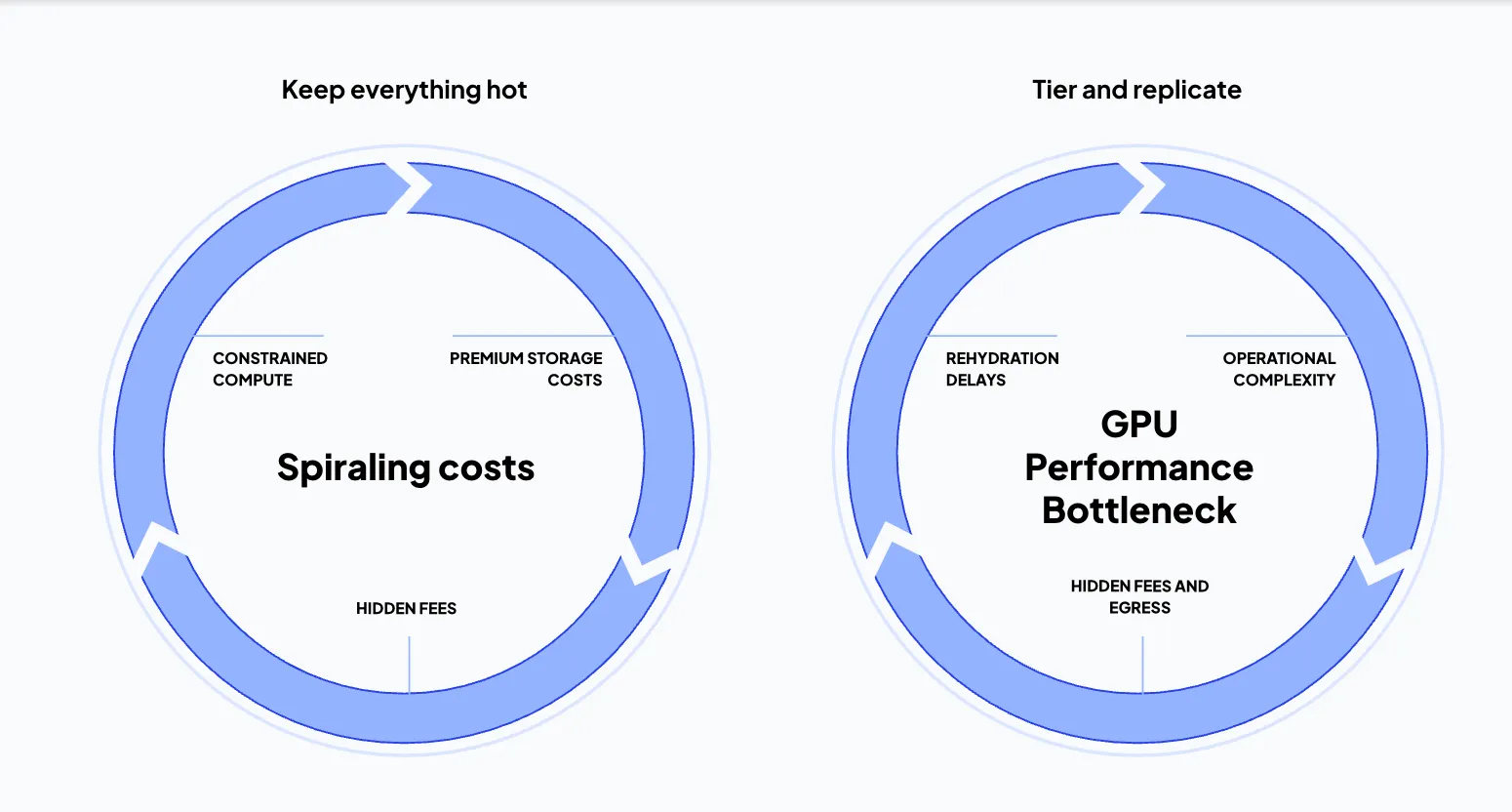

The result? Storage that looks cost-efficient on paper but proves deceptively expensive in practice. Teams are forced to either tier data and take the performance hit, or keep everything hot and absorb spiraling costs—often while locked into a single vendor to avoid egress penalties. Either path leads to inefficiency.

General-purpose storage strategies create cycles of inefficiency:

As workloads scale across regions and clouds, these inefficiencies multiply. The dynamics have turned storage into a silent limiter at AI scale—showing up as idle GPUs, throttled throughput, and runaway egress charges. A single training run waiting on data can idle hundreds of GPUs for minutes at a time—burning tens of thousands of dollars before an epoch even starts. Across training cycles, this compounds quickly.

For AI-first companies, object storage has become a high-performance layer of the optimization stack—not just a background utility.

How storage bottlenecks drain GPU utilization

No amount of GPU tuning can compensate for slow data access. When storage can’t keep pace, GPUs sit idle—consuming power and budget without producing progress.

In tiered storage environments, storage acts as a hidden gatekeeper on GPU utilization, often blurring lines between compute and I/O bottlenecks. What looks like a GPU constraint may be a data problem in disguise rooted in inefficient AI data pipelines that can’t move data fast enough to keep GPUs fully utilized. At scale, storage I/O limits ripple across the entire AI lifecycle:

- Training jobs stretch longer and consume more GPU hours

- Inference pipelines with large context windows hit the same latency wall

- Fine-tuning on fresh data reintroduces replication delays and cold-start penalties

Optimizing GPU utilization isn’t just about better schedulers or model tuning, it starts with low-latency storage that delivers data at line rate. Until that layer catches up, storage will remain a frontline blocker to both performance and cost efficiency.

The alternative is to trade efficiency for predictability by over-provisioning compute or keeping massive datasets “hot”. But those workarounds are costly and even many systems marketed as high-performance object storage still rely on replication schemes that introduce latency at scale.

Understanding these bottlenecks points to what AI-ready storage must deliver. So what does AI-ready storage actually look like?

Defining AI-ready object storage

Since most storage wasn’t built for AI, the question is what’s needed? To keep GPUs fully utilized and pipelines moving at production speed, AI data management and storage must match the pace, scale, and geography of modern workloads.

The next generation of AI platforms will be globally distributed by default. Storage must make that distribution seamless—delivering the same responsiveness and throughput no matter where data lives. In short, AI-ready storage should behave like local memory at global scale, serving as the foundation for performant AI data pipelines that keep models training and deploying without interruption.

CoreWeave flipped the storage model for AI-first speed

CoreWeave AI Object Storage wasn’t retrofitted for AI workloads. It rethinks storage from the hardware up, aligning data movement with GPU performance rather than cloud economics—delivering what next-generation AI storage solutions were meant to be.

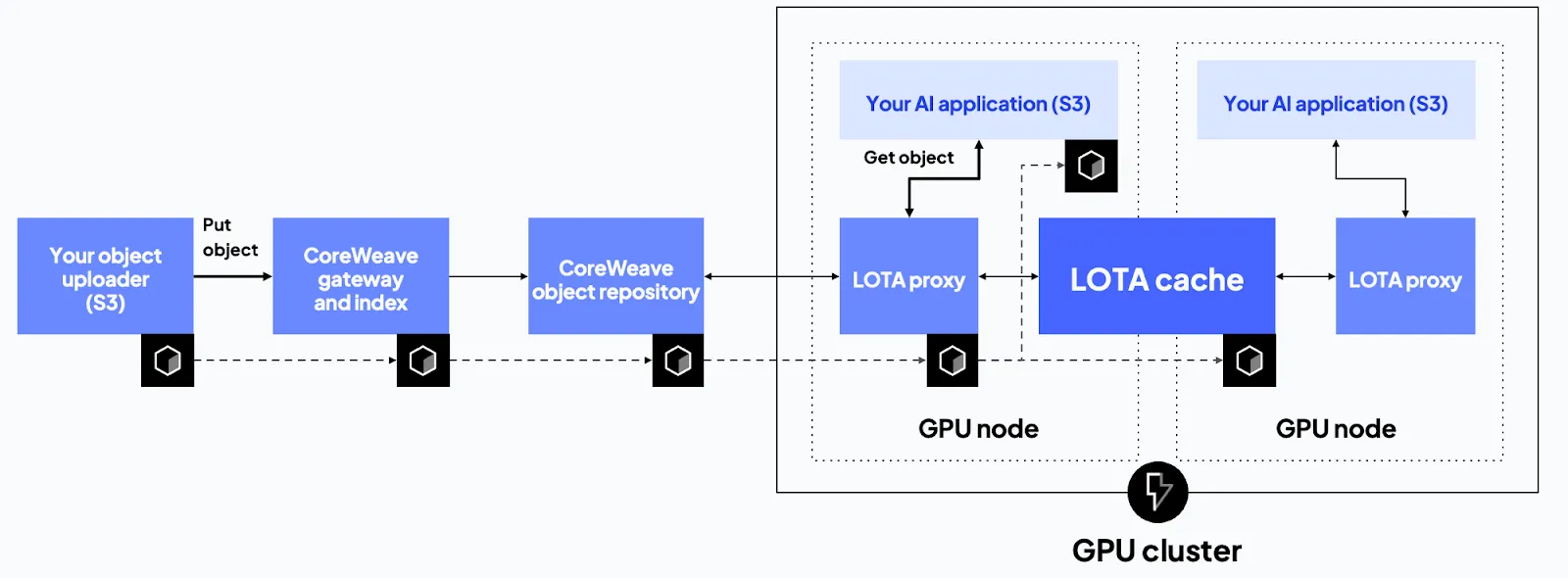

CoreWeave AI Object Storage delivers a high-performance architecture for AI workloads:

Purpose built for GPU performance

An InfiniBand backbone and Local Object Transport Accelerator (LOTA) caching deliver up to 7 GB/s per GPU, keeping GPUs fully fed while eliminating idle cycles.

One high-performance layer, everywhere

A unified data plane delivers a single layer of high-performance object storage that spans regions—and soon, clouds—simplifying AI data management by eliminating replication lag, silos, and manual synchronization.

Predictable and portable economics

Zero egress fees and transparent, usage-based pricing free teams to move fast without financial penalties. The new CoreWeave Zero Egress Migration (0EM) program even removes one of AI’s most expensive bottlenecks. It covers egress fees from other clouds and orchestrates secure, petabyte-scale transfers so teams can migrate data seamlessly and start building on CoreWeave without disruption or added cost.

Smart cost control

Adaptive hot, warm, and cold pricing automatically aligns storage charges with access patterns to cut storage costs up to 75% while maintaining consistent performance.

Together, these AI-native capabilities unlock more than just faster training, they enable you to:

- Run inference closer to users or across regions without re-architecting the data layer

- Fine-tune and evaluate models without moving massive files or waiting through cold starts

- Establish a consistent, high-throughput foundation for the entire AI lifecycle—from training to inference to continuous retraining

For AI-first companies, storage architecture is a strategic lever

As a pioneer at an AI-first company, you're building platforms that power the entire AI lifecycle—from training to deployment to continuous learning. Storage shouldn't limit that ambition.

As part of The Essential Cloud for AI, CoreWeave AI Object Storage turns storage into a strategic lever for utilization, velocity, and total cost of ownership—giving you the freedom to train, fine-tune, and deploy wherever opportunity demands.

Explore how CoreWeave’s AI-native storage unlocks performance and savings

Watch the on-demand webinar: How to Move Beyond Tiers, Tradeoffs, and Runaway Costs in AI Storage

Read the eBook: Beyond the Hot Tier: Cut AI Storage Costs While Accelerating What Comes Next

Read about the 0EM program: Set Your Data Free, No Egress Fees, No Catch: Introducing the CoreWeave Zero Egress Migration (0EM) Program

Have questions? Book a time to talk directly with a CoreWeave expert

%20(1).jpg)

.avif)

.jpeg)

.jpeg)

.jpg)