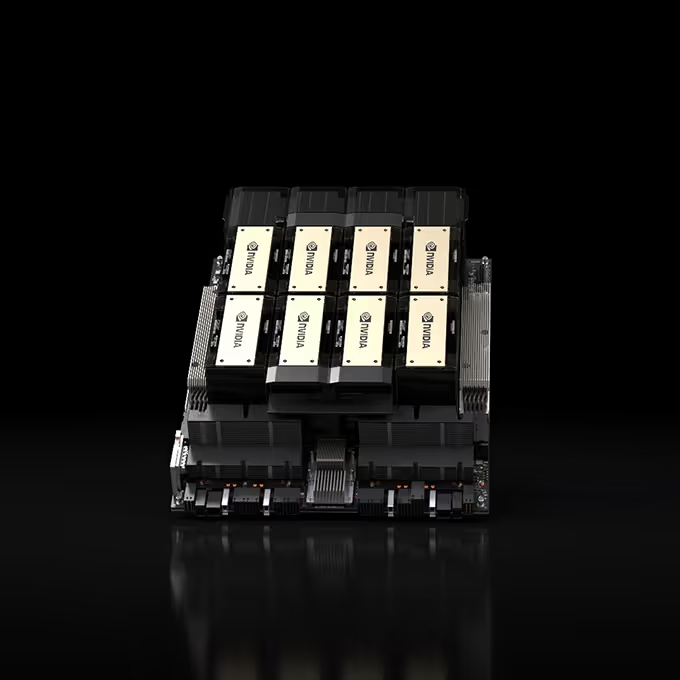

High-performance LLM inference

H200 doubles inference performance compared to H100 when handling LLMs such as Llama 2 70B. Deployed at scale for a massive user base, it delivers the highest throughput at the lowest total cost of ownership (TCO).

Industry-leading generative AI training and fine-tuning

NVIDIA H200 GPUs feature the Transformer Engine with FP8 precision, which provides up to 5X faster training and 5.5X faster fine-tuning over A100 GPUs for large language models.