Today, we are thrilled to announce that CoreWeave is the first cloud provider to bring NVIDIA H200 Tensor Core GPUs to the market. This latest launch adds to CoreWeave’s broad range of high-end NVIDIA GPUs, our growing portfolio of managed cloud services aimed at accelerating the development and deployment of GenAI applications, and CoreWeave’s position as the AI Hyperscaler™. In this blog, we will share technical details of CoreWeave’s H200 GPU instances, and why they provide the industry’s fastest performance. We’ll discuss, how our Mission Control platform helps with Cluster reliability and resiliency by managing the complexities of Cluster and Node lifecycle management, how customers can get up and running quickly via our managed Kubernetes service, and finally how customers can tap into our rich suite of Observability tools and services that provide transparency across all the critical system components to empowering teams to maintain uninterrupted AI development pipelines.

Background

Today’s news is another milestone in our record of firsts. In November 2022, CoreWeave was one of the first cloud providers to go live with NVIDIA HGX H100 instances, which are used to train some of the largest and most ambitious AI models. Not only were we one of the first to market with H100s, but we also broke records. According to the industry standard benchmark test, MLPerf v3.0, we built the world’s fastest supercomputer. Our supercomputer trained an entire GPT-3 LLM workload in under 11 minutes using 3,500 H100 GPUs–4.4x faster than the next best competitor.

CoreWeave has a long-standing collaboration with NVIDIA that was built through a track record of getting their industry-leading accelerated computing into the hands of end users at scale, and as quickly as possible. This is because our cloud was built from the ground up to support the most computationally complex workloads with an unwavering focus on performance at the core–meaning, we are unmatched in speed and scale, and we deliver at a pace that is required by the most demanding and ambitious AI labs and enterprises.

CoreWeave H200 Instances Overview & Performance

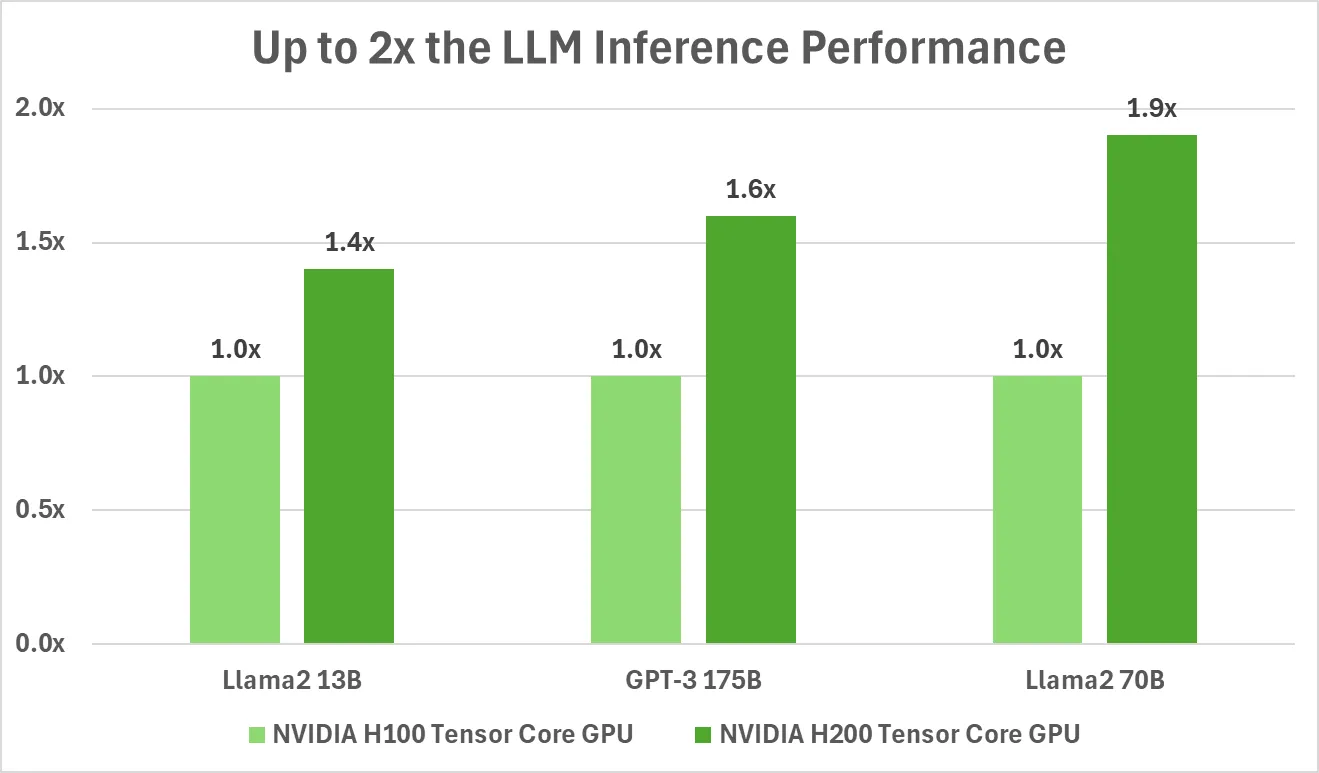

NVIDIA’s H200 platform is a step change for the AI industry and a class-leading product that is designed to handle the most computationally intensive tasks - from training massive generative AI models, delivering real-time inference at scale, to powering high-performance computing applications. The NVIDIA H200 Tensor Core GPU is designed to push the boundaries of Generative AI by providing industry-leading 4.8 TB/s memory bandwidth and 141 GB of HBM3e GPU memory capacity that help deliver up to 1.9X higher performance than H100 GPUs.

CoreWeave’s H200 instances combine NVIDIA H200 GPUs with Intel’s fifth-generation Xeon CPUs (Emerald Rapids); NVIDIA Bluefield-3 DPUs for offloading node networking, storage, and other management functions; and 3200Gbps of NVIDIA Quantum-2 InfiniBand networking. These instances are deployed in clusters with up to 42,000 GPUs to provide industry’s highest performance and enable customers to dramatically lower their time- and cost-to-train their GenAI models.

Mission Control

High-performance GPU infrastructure consists of numerous cutting-edge technologies coming together, such as the latest semiconductor process nodes, powerful compute chips, high bandwidth memory, high speed chip-to-chip interconnect and node-to-node connectivity. These technologies deliver an unprecedented amount of compute capabilities, with each generation providing 2-3X more raw compute power than the prior generation. This increase in performance is fundamental in pushing the capabilities of generative AI applications, but comes with additional overhead and complexities when dealing with node and system failures.

CoreWeave Mission Control offers customers a suite of services that ensure the optimal setup and functionality of hardware components through a streamlined workflow. It verifies that hardware is correctly connected, up to date, and thoroughly tested for peak performance. Advanced validation processes deliver healthier, higher-quality infrastructure to clients faster. Proactive health checking runs automatically when idle nodes are detected and swaps out problematic nodes for healthy ones before they cause failures. Extensive monitoring identifies hardware failures more quickly, shortening the amount of time to troubleshoot and restart training jobs, meaning interruptions are shorter and less expensive. The following image summarizes how CoreWeave’s Fleet LifeCycle Controller (FLCC) and Node LifeCycle Controller (NLCC) collectively work to provide AI infrastructure with industry-leading reliability and resilience, enabling us to build some of the largest NVIDIA GPU clusters in the world.

CKS & Managed Slurm on Kubernetes (SUNK)

To help customers quickly spin up large GPU clusters, CoreWeave provides CoreWeave Kubernetes Service (CKS) which provides blazing-fast performance, security, and flexibility in a managed Kubernetes solution. It is built from the ground up, specifically for AI applications, to offer unprecedented flexibility and control with optimal performance and security. CKS clusters leverage bare-metal nodes without a Hypervisor, which help in maximizing node performance, and NVIDIA BlueField DPU-based architecture for complete isolation and acceleration with cluster-private VPCs, making it ideal for AI workloads and a unique managed solution among hyperscalers.

To help customers easily run AI model training and inference jobs via CKS, CoreWeave provides an integrated experience for customers to run Slurm on Kubernetes (SUNK). SUNK integrates Slurm as a Kubernetes scheduler and allows for Slurm jobs to run inside Kubernetes. This creates a seamless experience, supports both burst and batch workloads on the same central platform, and allows developers to leverage the resource management of SLURM on Kubernetes. By deploying a Slurm cluster on top of Kubernetes (SUNK), on top of the same pool of compute infrastructure, customers have the flexibility to seamlessly use the compute infrastructure from either the Kubernetes or Slurm sides and concurrently run different types of jobs or workloads. Learn more about SUNK here.

Observability

CoreWeave Observability Services provide access to a rich collection of node and system-level metrics to complement the capabilities of Mission Control. Unhindered by a legacy software stack that limits capabilities of other AI infrastructure services providers, CoreWeave customers have access to detailed node-level and system-level metrics that quickly identify system faults and restore system-level performance. Faults can occur for various reasons, such as bad user configuration (memory allocation issues), misbehaving software updates, server component malfunctions, or issues in the high speed node-to-node network. Customers can collect and receive alerts on metrics across their fleet, with dashboards that visualize either the entire cluster or individual jobs, and identify root-cause issues in a matter of minutes. The following image provides an example of real-time dashboards that customers can create using CoreWeave Observability Services.

.webp)

Summary

We’re not slowing down. Soon, CoreWeave will also be among the first to market with the revolutionary NVIDIA Blackwell platform, which lets organizations everywhere build, train, and serve trillion-parameter large language models more efficiently.

We continue to be laser-focused on meeting the insatiable demand for our infrastructure and managed cloud services. Since the start of 2024, CoreWeave has set up nine new data centers globally, with 11 more in progress. In 2025, we anticipate starting work on 10 more on top of that. That is staggering progress.

We remain dedicated to pushing the boundaries of AI development and raising the bar for what’s possible when it comes to world-class performance at scale. We can’t wait to see what incredible innovations come to bear.

.webp)

%20(1).jpg)

.avif)

.jpeg)

.jpeg)

.jpg)