At CoreWeave, our mission is to power the creation and delivery of the intelligence that drives innovation. We’ve built the Essential Cloud for AI, a fully integrated platform designed from the ground up so every layer works in harmony: from high-performance compute, low-latency networking, intelligent orchestration, and quickly accessible storage to the developer tools, security, and observability that bring AI applications to life.

Storage is where many AI teams still hit limits. Training and fine-tuning large models require massive amounts of data located close to GPU clusters. Traditional storage systems weren’t built for that. They introduce latency, complexity, and hidden costs that slow down progress.

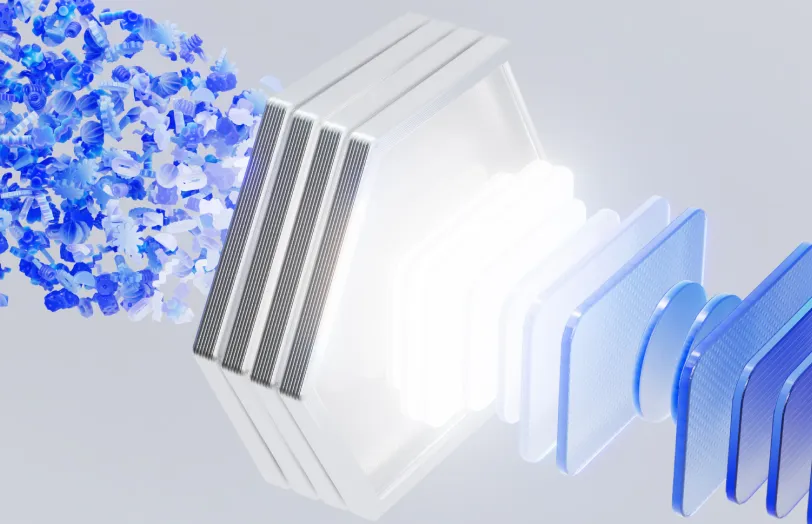

We created CoreWeave AI Object Storage to solve that problem. We built it as an AI-native storage solution designed alongside our compute and networking layers, optimized for throughput, scalability, and simplicity. It quickly became the foundation for our customers’ most demanding workloads, powering their training pipelines.

What’s New?

Today, we’re expanding what CoreWeave AI Object Storage can do for AI labs and enterprises in two key ways:

- Unified global datasets that can be used anywhere, with accelerated performance

- New automated usage-based pricing levels that lower our existing customers’ storage costs by more than 75% for a typical AI workload

These updates build on an already proven foundation and make CoreWeave AI Object Storage even more flexible and efficient for the next generation of AI workloads.

Unified Dataset Across Regions, Clouds, and On-Prem

AI teams need data mobility without friction. The ability to access, train, and deploy from the same dataset, anywhere, is critical for iteration speed and cost control. With the latest CoreWeave AI Object Storage, customers can work from one unified dataset across CoreWeave regions, other public clouds, and on-premises environments, with no replication overhead, punishing fees, or synchronization delays.

Our Local Object Transport Accelerator (LOTA) technology accelerates performance in any location, delivering an unmatched 7 GB/s per GPU throughput that scales linearly as workloads grow. This is how data moves at the speed of AI.
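As a rough illustration of what linear scaling implies, the sketch below multiplies the 7 GB/s per-GPU figure quoted above by the GPU count and estimates how long one full pass over a dataset would take. The function names and the example dataset size are hypothetical, and the model assumes ideal linear scaling with no other bottlenecks, so treat the numbers as an upper bound rather than a measured result.

```python
# Back-of-the-envelope model of linearly scaling read throughput.
# PER_GPU_GBPS is the per-GPU figure quoted in the post; everything else
# (function names, dataset size) is an illustrative assumption.
PER_GPU_GBPS = 7.0  # GB/s per GPU


def aggregate_throughput_gbps(num_gpus: int, per_gpu_gbps: float = PER_GPU_GBPS) -> float:
    """Ideal aggregate throughput in GB/s, assuming perfectly linear scaling."""
    if num_gpus <= 0:
        raise ValueError("num_gpus must be positive")
    return num_gpus * per_gpu_gbps


def seconds_to_read(dataset_gb: float, num_gpus: int, per_gpu_gbps: float = PER_GPU_GBPS) -> float:
    """Ideal time in seconds to read dataset_gb once across num_gpus GPUs."""
    return dataset_gb / aggregate_throughput_gbps(num_gpus, per_gpu_gbps)


if __name__ == "__main__":
    gpus = 8
    dataset_gb = 5600.0  # hypothetical dataset size in GB
    print(f"{gpus} GPUs: {aggregate_throughput_gbps(gpus):.0f} GB/s aggregate")
    print(f"One pass over {dataset_gb:.0f} GB: {seconds_to_read(dataset_gb, gpus):.0f} s")
```

Under these assumptions, doubling the GPU count halves the time for one pass over the same dataset. Real workloads will see lower numbers depending on object sizes, access patterns, and network conditions.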

Flexible Pricing Built for Real AI Workloads

Performance matters, but affordability determines scale. With new, automated usage-based billing levels (Hot, Warm, and Cold), CoreWeave AI Object Storage lowers existing customers’ storage costs by more than 75% compared to our previous pricing. The new approach automatically bills for data based on usage. No need to manually move data, manage this process, or pay for automation. No punitive data access fees for archived data. It just happens, instantaneously and transparently.

This approach has already helped several large-scale training customers reduce total storage costs by double-digit percentages while maintaining the same high throughput and reliability that CoreWeave is known for.

CoreWeave AI Object Storage’s combination of seamless access and persistent acceleration delivers significant benefits to developers, according to Holger Mueller, vice-president and principal analyst at Constellation Research. “With cross-region and cross-cloud acceleration, CoreWeave is delivering what developers need most—consistent, high-throughput access to a single dataset, without replication,” says Mueller. “Leveraging technologies like LOTA caching and InfiniBand networking, CoreWeave AI Object Storage ensures that GPUs remain utilized efficiently across distributed environments—a critical capability for scaling next-generation AI workloads.”

Designed for the Way AI Teams Work

Since launch, CoreWeave AI Object Storage adoption has grown rapidly, becoming the preferred storage layer for a majority of our large-scale model training workloads. Customers now use it not only for training but also for inference pipelines, agentic workloads, and hybrid AI environments.

Because it is deeply integrated with CoreWeave’s compute, networking, orchestration, and platform layers, CoreWeave AI Object Storage is more than just storage. It is a performance multiplier across the AI lifecycle.

These latest enhancements reflect what our customers value most:

- Velocity: the ability to iterate and deploy faster

- Scalability: no operational friction or data silos

- Efficiency: performance that scales while reducing cost

“High-performance, unified storage is an essential element of an AI cloud,” says Dave McCarthy, Research VP at IDC. “CoreWeave AI Object Storage delivers a single global dataset with cross-region performance that keeps GPUs efficiently utilized and eliminates costly data duplication. By combining predictable throughput with transparent, usage-based pricing and zero egress or replication fees, CoreWeave enables AI teams to significantly cut data costs while accelerating time to insight. This alignment of performance and efficiency gives CoreWeave an advantage as an AI-native cloud built for the economics of modern AI workloads.”

What’s Next?

With CoreWeave AI Object Storage, AI teams can now operate with a single global dataset that moves freely and scales intelligently. Starting October 16, cross-region LOTA acceleration becomes generally available, and we will expand it to multi-cloud and hybrid environments in early 2026. Starting today, current customers will automatically benefit from our usage-based pricing, which lowers storage costs by more than 75% for a typical AI workload.

This is how we continue to build the Essential Cloud for AI — by giving pioneers the freedom to innovate without constraint.

For those building what’s next, the right cloud isn’t optional. It’s essential.
