The rise of new large (and mega) language models with seemingly limitless applications are poised to change the world as we know it.

As LLM parameters evolve into the trillions, CoreWeave is diving deeper into the most promising and pragmatic models, offering advice to entrepreneurs, developers and researchers on the latest options to build and deploy NLP focused products and services as quickly and cost effectively as possible.

With Great Power Comes Great Infrastructure – How CoreWeave Can Help

CoreWeave aims to help companies harness the potential and navigate many of the common challenges associated with training, fine-tuning, and serving these models at scale.

Training

CoreWeave was NVIDIA’s first Elite Cloud Services Provider for Compute, and we take our commitment to offer the industry’s best distributed training infrastructure seriously.

By training in containers-on-bare-metal, libraries such as NCCL are able to get a proper view of the actual hardware architecture without the risk of a virtualization layer misrepresenting PCI buses and NUMA topologies.

Large scale training requires high bandwidth low-latency interconnect both inside a node, achieved via NVSWITCH in our A100 cluster, and between nodes in a distributed training setup. We build our A100 distributed training clusters with a rail-optimized design using NVIDIA Quantum InfiniBand networking, and in-network collections using NVIDIA SHARP to deliver the highest distributed training performance possible.

Our new integration with Determined.AI supports multi-GPU and multi-node distributed training out of the box with support for many popular frameworks, including GPT-Neo-X to train and fine-tune EleutherAI’s 20B model.

Fine-Tuning

No matter which model you wish to fine-tune, we have GPU options to match. Not all training workloads require A100s with Infiniband, but for those that do, we offer both 40GB and 80GB NVLINK options. NVIDIA A40 and A6000 GPUs provide 48GB of VRAM, allowing for large batch sizes, while being much less expensive to operate.

Serving

CoreWeave Cloud allows for easy serving of machine learning models, ranging in size from GPT-J 6B – available via our one click GPT-J app – to BLOOM. The models can be sourced from a range of locations, including CoreWeave Cloud storage, popular model hosting platforms like HuggingFace and other 3rd party storage environments.

For businesses looking for more control, CoreWeave’s InferenceService is backed by well supported open-source Kubernetes projects like KNative Serving. Optimized for fast spin-up times and responsive auto-scaling across the industry’s broadest range of GPUs, our clients find our infrastructure to deliver a 50-80% lower performance-adjusted cost compared to the legacy public clouds.

We also offer a fully managed API – via Goose.ai – to serve pre-trained, open-source models. With GooseAI, you’ll get an optimized inference stack running on CoreWeave Cloud without any DevOps overhead.

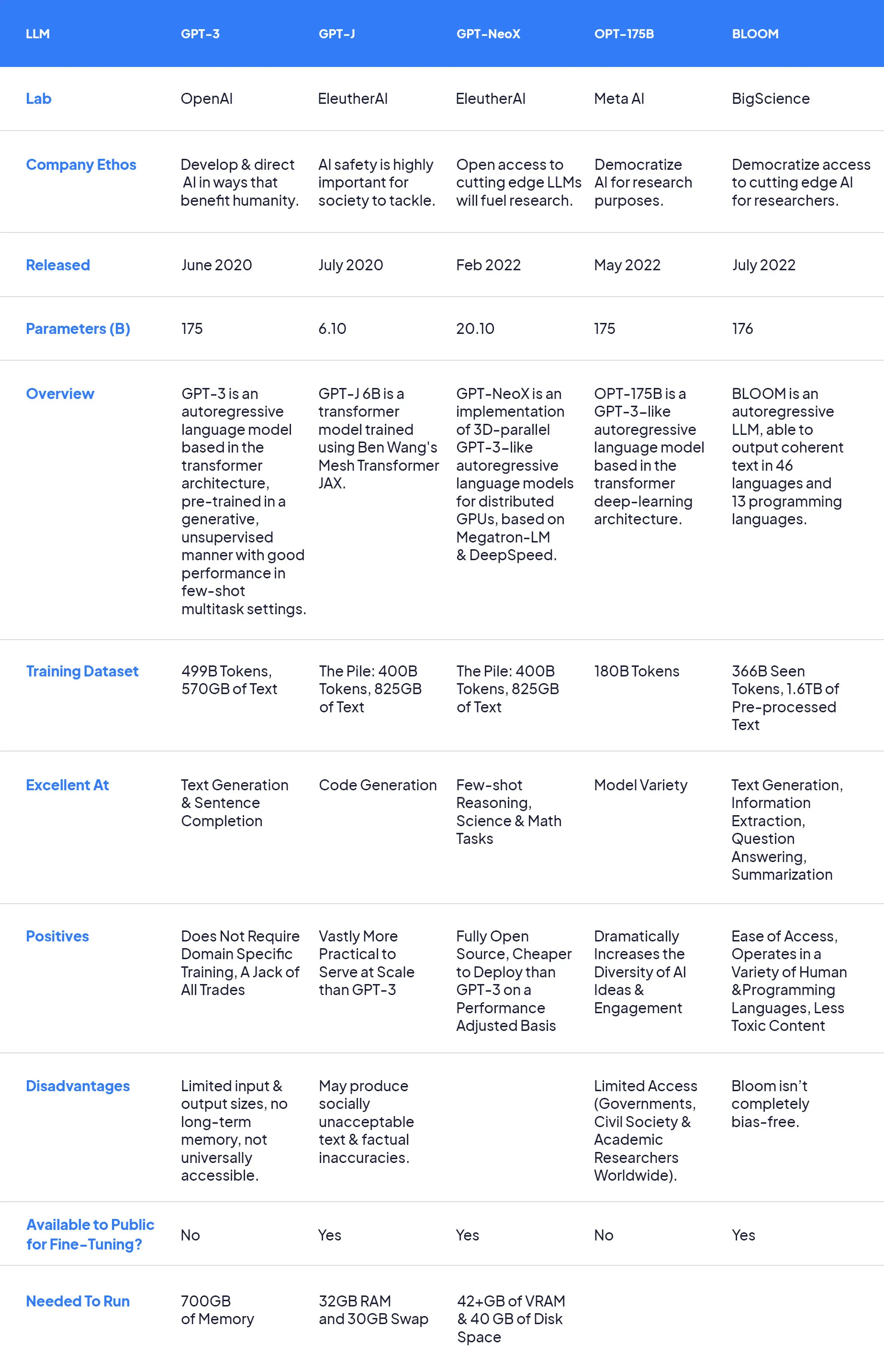

How Do Latest Large Language Model’s Stack Up?

As LLM usage grows, more and more of our clients ask how they should think about training, serving, fine-tuning and deploying them

If you are a developer who is currently working with or evaluating which large language models are right for your use case, we’ve put together our thoughts on the nuances, advantages and disadvantages of the most important and promising models.

Spotlight – BLOOM 176B

While other LLMs have hidden their models from the public and kept their code secret, BLOOM 176B is open to the world. The 1,000 researchers who created BLOOM, in partnership with HuggingFace and the French government, have blown the door wide open on open-source AI.

What’s most impressive about this model is that it can process 46 human languages (French, Catalan, 13 Indic languages, 20 African languages and more), in addition to 13 programming languages. Including such a wide variety of languages is another way BLOOM is helping to democratize LLMs.

Click here to see how you can deploy BLOOM as an InferenceService on CoreWeave.

About CoreWeave

CoreWeave empowers clients to serve LLMs by solving all of the associated tech limitations and computational challenges.

In partnership with companies like EleutherAI and Bit192, we strive to support important LLM work that is open to the world, so that researchers and entrepreneurs can continue to push the boundaries of what is possible.

Get In Touch

CoreWeave is partnering with companies around the world to fuel AI & NLP development, and we’d love to help you too! Get started by speaking with one of our engineers today.